Data is the lifeblood of a company. If its data were completely lost, a company could be at risk of going out of business. If its underlying storage performs too slowly, the company will struggle to compete with rivals that can store and retrieve data more quickly. These ever-increasing requirements are pushing IT departments to investigate storage that is more highly available, higher performing, and easily scalable. Yet, in spite of these growing demands, the traditional storage array market is in decline [Gartner Magic Quadrant for General Purpose Disk Arrays, 2017]. One reason is that many customers are turning to more flexible solutions, such as software-defined storage and hyperconvergence, which use wide scalability and data locality to meet the availability and performance needs of the business.

Not all solutions are created equal, so it is important to ensure that writes and reads occur as quickly as possible while also guaranteeing the long-term integrity and availability of the data within the system. Some vendors have taken shortcuts in this formula, for example by sacrificing data integrity to improve performance. HPE SimpliVity powered by Intel® takes speed, long-term availability, and integrity seriously: it ensures that data is well protected before acknowledging a write back to the virtual machine (VM), without sacrificing performance. This post walks through the process of writing a block of data within the HPE SimpliVity solution and highlights key features along the way.

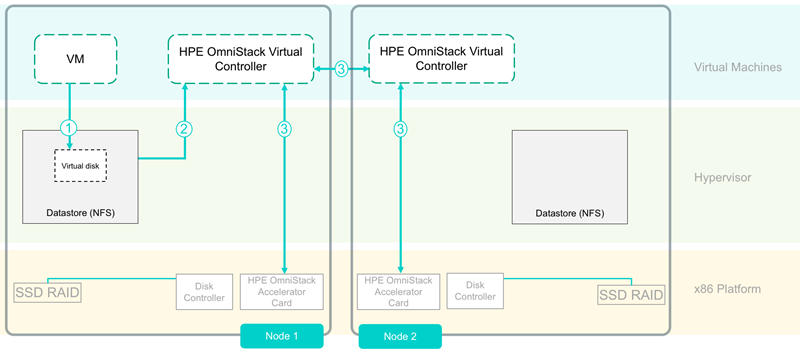

When the operating system inside a VM writes a block to a virtual disk, the block is processed through an NFS share presented by the HPE OmniStack Virtual Controller. The HPE OmniStack Virtual Controller is a VM that runs on every HPE SimpliVity 380 node and contains the software stack that forms the foundation of the Data Virtualization Platform. Its processing capabilities are augmented by the PCIe-based HPE OmniStack Accelerator Card, which allows the system to perform inline deduplication and compression without the typical latency penalties.

The Virtual Controller first commits the block to random-access memory (RAM) on the HPE OmniStack Accelerator Card. This RAM is backed by flash storage and a bank of supercapacitors, also located on the card, so that no data is lost in the event of a power failure. At the same time, the data is sent to the Virtual Controller on a secondary node (defined automatically per VM) to be synchronously committed to that node's Accelerator Card. Immediately after the block is committed to the Accelerator Card, deduplication and compression begin independently on each node.

Figure 1: Data is written through the NFS datastore presented by the HPE OmniStack Virtual Controller and committed to the HPE OmniStack Accelerator Card on two different nodes.
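Once a block is committed to the Accelerator Card, each node deduplicates and compresses it independently. The general idea of inline deduplication can be illustrated with a minimal Python sketch: a content fingerprint identifies repeated blocks so that each unique block is stored only once, and only new blocks are compressed. The class `DedupStore` and its `ingest` method are hypothetical, and SHA-256 and zlib are stand-ins chosen for illustration; this post does not say which fingerprinting or compression algorithms HPE SimpliVity uses, and in the product this work is assisted by the Accelerator Card rather than done in Python.

```python
from __future__ import annotations

import hashlib
import zlib


class DedupStore:
    """Hypothetical per-node index mapping a content fingerprint to a compressed block."""

    def __init__(self) -> None:
        self.unique: dict[str, bytes] = {}  # fingerprint -> compressed block data
        self.refcount: dict[str, int] = {}  # fingerprint -> number of logical references

    def ingest(self, data: bytes) -> tuple[str, bool]:
        """Return (fingerprint, is_new). Only previously unseen blocks are compressed and kept."""
        # Assumption: SHA-256 stands in for whatever fingerprint the real system uses.
        fp = hashlib.sha256(data).hexdigest()
        if fp in self.unique:
            # Duplicate block: record another reference, write nothing new.
            self.refcount[fp] += 1
            return fp, False
        # Unique block: compress it (zlib as a stand-in) and stage it for disk.
        self.unique[fp] = zlib.compress(data)
        self.refcount[fp] = 1
        return fp, True


# Usage: three 4 KiB writes, two of which carry identical content.
store = DedupStore()
blocks = [b"A" * 4096, b"B" * 4096, b"A" * 4096]
print([store.ingest(b)[1] for b in blocks])  # [True, True, False]
print(len(store.unique))                     # 2
```

Because duplicates are detected before compression, a repeated block costs only a reference-count update rather than another compressed copy.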

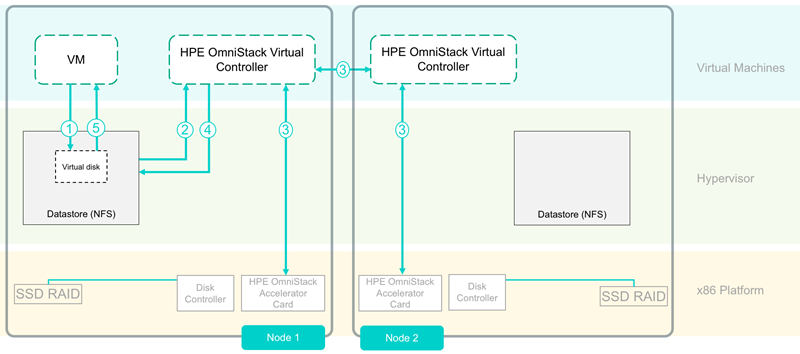

At this point, the data has been stored on the Accelerator Card on two nodes, and is therefore protected from data center power loss and the permanent loss of an entire node. An acknowledgement is now sent back to the hypervisor and then to the VM. The write process is complete from the guest operating system’s point of view.

Figure 2: After processing the block on both nodes, the write is acknowledged back to the hypervisor and VM, completing the VM’s write operation.
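The ordering shown in Figures 1 and 2, where the write is acknowledged only after the block is held on two nodes, can be modelled with a minimal Python sketch. `Node`, `commit`, `healthy`, and `mirrored_write` are hypothetical names; an in-memory dict stands in for the power-protected RAM on each Accelerator Card, and a two-thread pool stands in for the parallel local commit and transfer to the secondary node.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


class Node:
    """Hypothetical stand-in for one node's Accelerator Card write buffer."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy              # simulate a node that cannot commit
        self.buffer: dict[int, bytes] = {}  # block address -> data

    def commit(self, address: int, data: bytes) -> bool:
        if not self.healthy:
            return False
        # In the real system this is power-protected RAM; here it is a dict.
        self.buffer[address] = data
        return True


def mirrored_write(primary: Node, secondary: Node, address: int, data: bytes) -> bool:
    """Commit a block on both nodes in parallel; acknowledge only if both succeed."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(n.commit, address, data) for n in (primary, secondary)]
        results = [f.result() for f in futures]
    # The acknowledgement goes back to the hypervisor only when both copies exist.
    return all(results)


# Usage: a healthy pair acknowledges; a pair with a failed partner does not.
a, b = Node("node-a"), Node("node-b")
print(mirrored_write(a, b, 42, b"\x00" * 4096))                     # True
print(mirrored_write(a, Node("node-c", healthy=False), 7, b"y"))    # False
```

Waiting on both futures before returning is what gives the guest its guarantee: by the time it sees the write complete, the loss of either node alone cannot lose the block.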

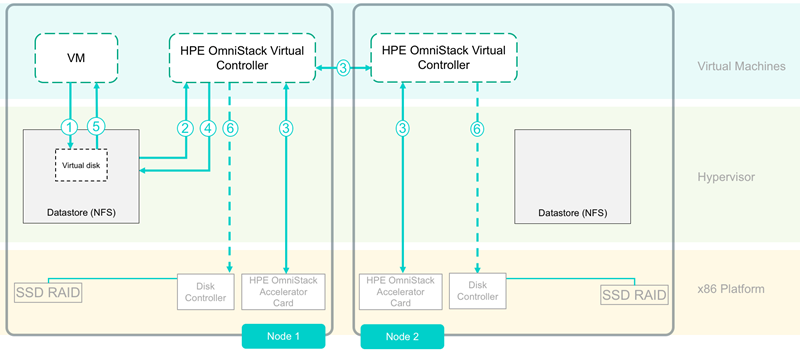

Independently, the HPE OmniStack Virtual Controller on each node combines the unique, compressed blocks into an efficient write for the RAID-protected disks. Once a full stripe has been assembled, the blocks are written to disk. Together, deduplication, compression, and this block optimization reduce the write I/O sent to the disks.

Figure 3: Independent of the write acknowledgement, the HPE OmniStack Virtual Controller creates an optimized write for the RAID-protected disks and commits to disk the unique and optimized blocks.
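The full-stripe behaviour described above can be sketched as a buffer that accumulates unique, compressed blocks and flushes only complete stripes. In general, writing whole stripes lets a parity RAID layer compute parity from the new data alone instead of reading old data first. `StripeWriter` and `stripe_size` are hypothetical, the stripe size is an arbitrary illustrative value, and this simplified version lets a block span two stripes; the post does not describe how HPE SimpliVity lays blocks out within a stripe.

```python
from __future__ import annotations


class StripeWriter:
    """Hypothetical buffer that batches compressed blocks into full RAID stripes."""

    def __init__(self, stripe_size: int) -> None:
        self.stripe_size = stripe_size          # bytes per full stripe (assumed value)
        self.pending = bytearray()              # data not yet written to disk
        self.stripes_written: list[bytes] = []  # each entry stands in for one disk write

    def add(self, compressed_block: bytes) -> None:
        self.pending.extend(compressed_block)
        # Flush only complete stripes so every disk write is a full-stripe write.
        while len(self.pending) >= self.stripe_size:
            stripe = bytes(self.pending[: self.stripe_size])
            del self.pending[: self.stripe_size]
            self.stripes_written.append(stripe)


# Usage: four 6-byte blocks fill one 16-byte stripe, leaving 8 bytes pending.
w = StripeWriter(stripe_size=16)
for blk in [b"a" * 6, b"b" * 6, b"c" * 6, b"d" * 6]:
    w.add(blk)
print(len(w.stripes_written), len(w.pending))  # 1 8
```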

Overall, this process creates an efficient and safe platform for storing data. With deduplication at the core of the HPE SimpliVity Data Virtualization Platform, every HPE SimpliVity hyperconverged infrastructure deployment gains capacity savings and I/O efficiency without degrading performance, while keeping the data well protected.

For a more in-depth look at the HPE SimpliVity architecture, including how our customers achieve the high availability and performance, through data efficiency, that traditional data centers can't match, see the technical whitepaper: HPE SimpliVity hyperconverged infrastructure for VMware vSphere